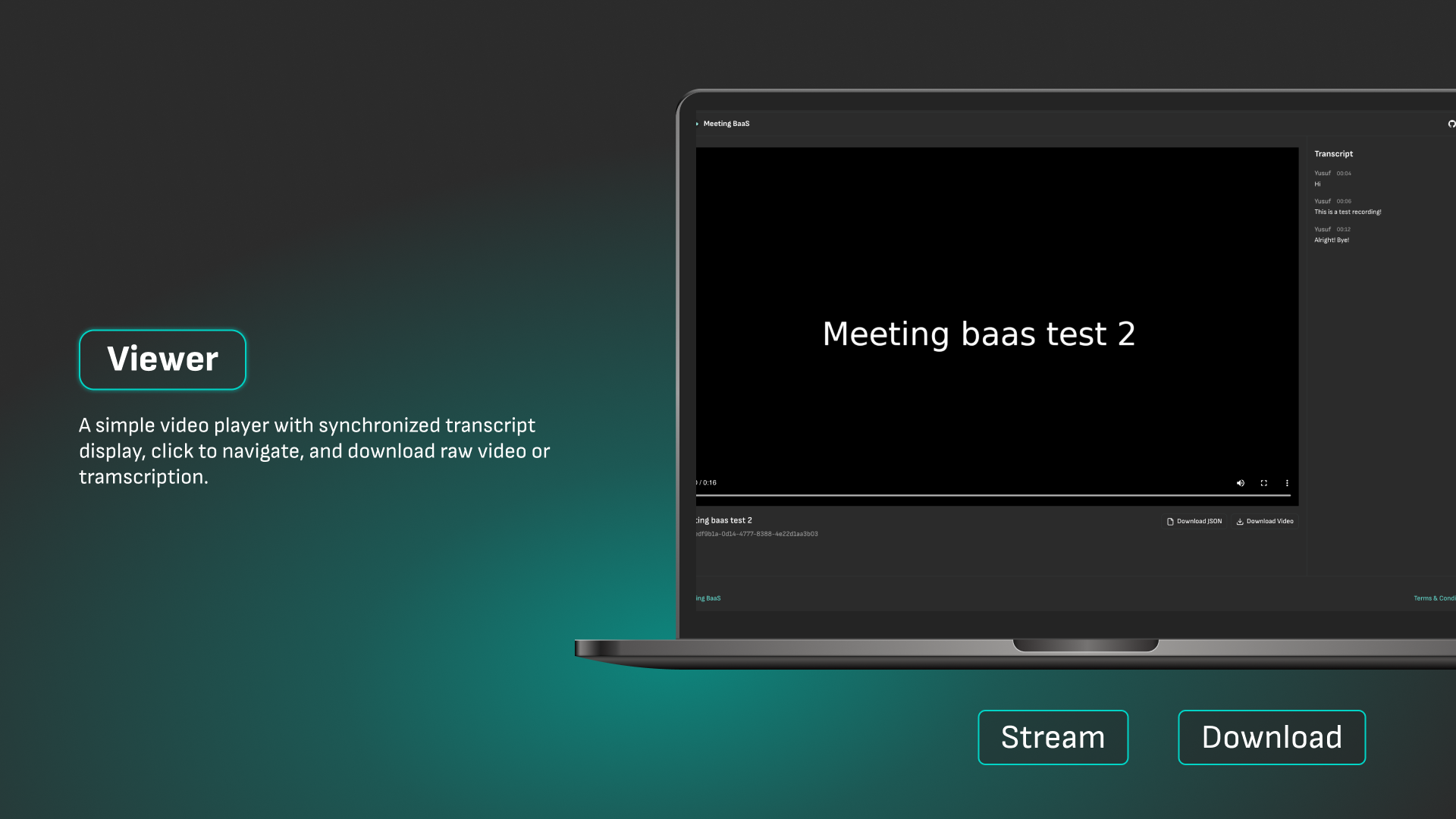

Meeting BaaS Viewer

A video viewer interface built with Next.js for viewing meeting recordings and navigating their transcripts, using real data from your meeting bot recordings.

Overview

This Next.js application provides a comprehensive video player with synchronized transcript display for meeting recordings captured by Meeting BaaS bots. Key features include:

- Video playback with standard controls

- Synchronized transcript display with real meeting data

- Current word highlighting in the transcript

- Video navigation by clicking on transcript words

- Responsive interface with resizable split view

- Real-time data from your meeting bot recordings

- Authentication integration with centralized auth app
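As an illustration of how current-word highlighting can work, the sketch below looks up the word being spoken at a given playback time using a binary search. It is not taken from the viewer's source: the `TimedWord` shape and the `findActiveWordIndex` name are assumptions made for this example, and it assumes word timestamps are in seconds, sorted, and non-overlapping.

```typescript
// Hypothetical word shape (an assumption for this sketch):
// times are seconds from the start of the recording.
interface TimedWord {
  text: string;
  start: number;
  end: number;
}

// Returns the index of the word being spoken at `time`, or -1 if the
// playhead is in a gap between words. Assumes `words` is sorted by
// `start` and that words do not overlap.
function findActiveWordIndex(words: TimedWord[], time: number): number {
  let lo = 0;
  let hi = words.length - 1;
  while (lo <= hi) {
    const mid = (lo + hi) >> 1;
    const word = words[mid];
    if (time < word.start) {
      hi = mid - 1; // playhead is before this word
    } else if (time >= word.end) {
      lo = mid + 1; // playhead is after this word
    } else {
      return mid; // start <= time < end
    }
  }
  return -1;
}

const sampleWords: TimedWord[] = [
  { text: "Hello", start: 0.0, end: 0.4 },
  { text: "everyone", start: 0.5, end: 1.1 },
  { text: "welcome", start: 1.3, end: 1.9 },
];

console.log(findActiveWordIndex(sampleWords, 0.7)); // 1
console.log(findActiveWordIndex(sampleWords, 1.2)); // -1 (gap between words)
```

In a player, a lookup like this would typically run on each time update, with the returned index used to decide which word receives the highlight style.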

Tech Stack

- Framework: Next.js 15.3.2

- Language: TypeScript

- Styling: Tailwind CSS 4

- UI Components: Shadcn

- Authentication: Centralized Auth app integration

- Package Manager: pnpm

Key Features

- Real Meeting Data: Uses actual recordings and transcripts from your Meeting BaaS bots instead of mock data

- Advanced Video Controls: Full video.js integration with custom controls

- Interactive Transcripts: Click any word to jump to that moment in the video

- Speaker Identification: Clear speaker labels and timestamps

- Responsive Design: Works seamlessly on desktop and mobile devices

- Authentication: Secure access to your meeting recordings
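To make the speaker labels and timestamps above concrete, here is a minimal sketch that groups consecutive words by speaker and formats each segment's start time as `mm:ss`. The `SpokenWord` and `SpeakerSegment` shapes and both function names are hypothetical; the actual transcript format returned by Meeting BaaS may differ.

```typescript
// Hypothetical shapes (assumptions for this sketch, not the viewer's types).
interface SpokenWord {
  speaker: string;
  text: string;
  start: number; // seconds from the start of the recording
}

interface SpeakerSegment {
  speaker: string;
  start: number;
  text: string;
}

// Formats a time in seconds as zero-padded minutes and seconds.
function formatTimestamp(seconds: number): string {
  const total = Math.floor(seconds);
  const m = Math.floor(total / 60);
  const s = total % 60;
  return `${String(m).padStart(2, "0")}:${String(s).padStart(2, "0")}`;
}

// Merges consecutive words from the same speaker into one segment.
function groupBySpeaker(words: SpokenWord[]): SpeakerSegment[] {
  const segments: SpeakerSegment[] = [];
  for (const word of words) {
    const last = segments[segments.length - 1];
    if (last && last.speaker === word.speaker) {
      last.text += " " + word.text;
    } else {
      segments.push({ speaker: word.speaker, start: word.start, text: word.text });
    }
  }
  return segments;
}

const spoken: SpokenWord[] = [
  { speaker: "Alice", text: "Good", start: 61.2 },
  { speaker: "Alice", text: "morning", start: 61.6 },
  { speaker: "Bob", text: "Hi", start: 75.8 },
];

for (const seg of groupBySpeaker(spoken)) {
  console.log(`[${formatTimestamp(seg.start)}] ${seg.speaker}: ${seg.text}`);
}
// [01:01] Alice: Good morning
// [01:15] Bob: Hi
```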

Getting Started

Prerequisites

- Node.js (LTS version)

- pnpm 10.6.5 or later

Installation

- Clone the repository:

    git clone https://github.com/Meeting-Baas/viewer
    cd viewer

- Install dependencies:

    pnpm install

- Set up environment variables:

    cp .env.example .env

  Fill in the required environment variables in .env. Details about the expected values for each key are documented in .env.example.

- Start the development server:

    pnpm dev

The application will be available at http://localhost:3000.

How It Works

- Authentication: Users authenticate through the centralized auth system

- Meeting Selection: Browse and select from your recorded meetings

- Video Playback: Stream meeting recordings with full video controls

- Transcript Navigation: View synchronized transcripts with word-level accuracy

- Interactive Features: Click transcript words to navigate video timeline
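The click-to-navigate step can be sketched as a small helper that moves the playhead to a word's start time. This is an assumption-laden illustration rather than the viewer's actual code: `SeekTarget` and `seekToWord` are hypothetical names, and the interface is modeled on the `currentTime` and `duration` properties of a standard `HTMLVideoElement`. A video.js player exposes `currentTime(seconds)` as a method instead, so the assignment would need adapting there.

```typescript
// Minimal structural type satisfied by HTMLVideoElement.
// (Hypothetical name; kept small so the helper can run without a DOM.)
interface SeekTarget {
  currentTime: number;
  duration: number; // may be NaN before metadata has loaded
}

// Moves the playhead to the start of a clicked word, clamped to the
// valid range of the recording. Returns the time actually applied.
function seekToWord(target: SeekTarget, wordStart: number): number {
  const lower = Math.max(wordStart, 0);
  const upper = Number.isFinite(target.duration) ? target.duration : lower;
  const clamped = Math.min(lower, upper);
  target.currentTime = clamped;
  return clamped;
}

// Usage with a stand-in object; in the browser this would be the <video> element.
const fakeVideo: SeekTarget = { currentTime: 0, duration: 60 };
seekToWord(fakeVideo, 12.5);
console.log(fakeVideo.currentTime); // 12.5
```

Clamping keeps a malformed timestamp (negative, or past the end of the recording) from producing an invalid seek.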

Data Integration

The viewer connects directly to your Meeting BaaS account, pulling real meeting recordings and transcripts from your bots. This eliminates the need for mock data and provides immediate access to your actual meeting content.